This blog post is Part Two of an edited transcription of the presentation “The Knowledge and How to Get it”, delivered by Hans Hillen (in person) and Ricky Onsman (via video) on 15 March at CSUN 2023.

Wait – let me read Part One first.

The Knowledge Life Cycle

In Part One, we talked about how we manage and output accessibility knowledge within our organization. And how our job is never done; we are always looking to update and improve our knowledge-based content.

Of course, this can get overwhelming at times; while there are always more articles to write, training modules to build, and rule content to update, there are only four of us and we all have other responsibilities as well.

Many of you reading this will have experienced a similar state of overwhelmingness at some point. With never-ending knowledge and limited resources, how do you know what to prioritize and elevate to solidified content? And where does this knowledge originate?

That’s what we want to talk about next: recognizing and responding to knowledge opportunities, and following the ‘knowledge life cycle’.

Knowledge opportunities can be…

What is a knowledge opportunity? It’s basically anything that we can either turn into new content, or improve existing content with. A lot of these knowledge opportunities arise naturally of course, as part of our daily experience of being accessibility enthusiasts.

We have our own ideas about what content needs to be added, and we get inspired by all the other great minds in the accessibility space, whether it’s through their blog posts, social media presentations, or conference appearances. We would call those ‘external knowledge opportunities’.

But what we want to focus on here are the ‘internal knowledge opportunities’, which we think are the most valuable. They are the requests and suggestions that come in from our own team members at TPGi, in particular the accessibility engineers who perform audits and tests every day.

These are the people that know exactly where content is lacking, as they deal with that content repeatedly. Our goal is to include them in the process by striving for their consensus and involvement as much as we can.

If and when those team members want to request or suggest new content, there are basically two paths for putting that on our road map: the direct path or the ARC Capture submission path.

The direct path involves contacting us through Teams chat or logging an issue on GitHub. We have a dedicated channel on Teams where people can ask questions and suggest improvements, and people can also contact us through Teams directly if they would rather ask their question in a one-on-one chat.

In addition, we also monitor any accessibility-related discussions on Teams, even those that aren’t specifically directed to us. Often these conversations lead to insights about new articles and courses.

Then there are the requests that are submitted during an audit. This is done through a feature built into our ARC Capture tool, which can be used by either the original tester or the QA team member who reviewed their work.

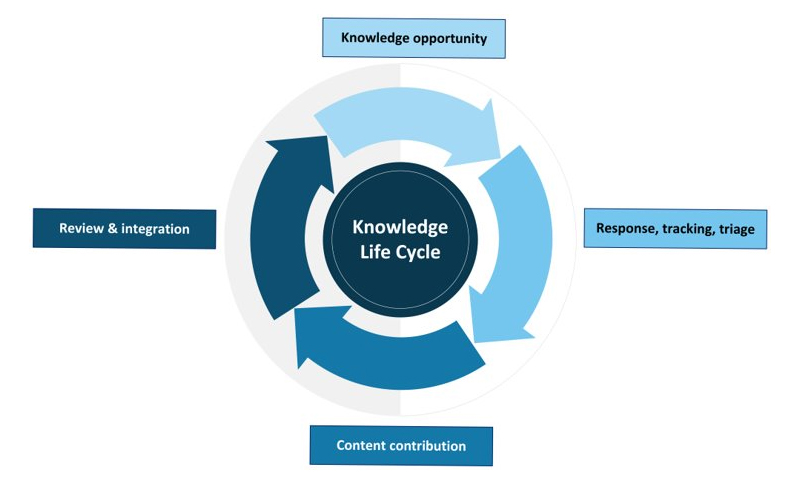

This basic diagram illustrates how we see this knowledge cycle, which any organization can follow.

It consists of four arrows that together form a circle. The first arrow represents the recognition of an initial knowledge opportunity.

This points to the second arrow, which represents the Knowledge Team responding to this opportunity, tracking it and triaging it. We use GitHub to manage and keep track of these requests, but you can use whatever tool you prefer for bugs or user stories. Even if you can’t fix these requests right now, they can be fixed at a later point rather than getting lost in the ether.

Prioritize the people who deal with the content the most. In our case that’s the accessibility engineers. If they repeatedly look into a specific use case that doesn’t have a good solution written up and ready to go for them, the amount of their time wasted and frustration raised will compound with every audit. So we need to prioritize those issues that will speed up their work and keep them happy.

This leads to the third arrow: a contribution of new or updated content. In our case this is done in the form of GitHub pull requests, which can be submitted by someone from the Knowledge Center or someone else, often the original reporter.

This then leads to the fourth arrow, which represents the update being reviewed and integrated. If possible, keep that original reporter in the loop, for example by tagging them as a reviewer in the pull request. That way they can confirm whether the update matches what they were expecting.

This fourth arrow then points back to the first, which is where the cycle starts all over again.
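For readers who think in code, the four arrows can be sketched as a small cyclic state machine. This is only an illustration of the diagram described above; the names `Stage` and `next_stage` are hypothetical and are not part of any TPGi tooling.

```python
from enum import Enum


class Stage(Enum):
    """The four arrows of the knowledge cycle, in order (hypothetical names)."""
    RECOGNIZE = "recognize a knowledge opportunity"
    RESPOND = "respond: track and triage the request"
    CONTRIBUTE = "contribute new or updated content"
    INTEGRATE = "review and integrate the update"


def next_stage(stage: Stage) -> Stage:
    """Return the stage the given arrow points to, wrapping back to the start."""
    stages = list(Stage)
    return stages[(stages.index(stage) + 1) % len(stages)]


if __name__ == "__main__":
    stage = Stage.RECOGNIZE
    for _ in range(len(Stage) + 1):
        print(stage.name, "-", stage.value)
        stage = next_stage(stage)
```

The modulo step is what makes it a cycle rather than a pipeline: the stage after integration is recognition again.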

Let’s look at two specific examples of knowledge opportunities.

Direct request on Teams

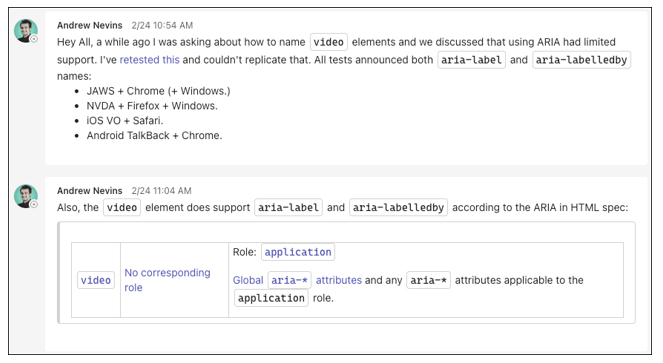

In this example, our colleague Andrew Nevins asked us a direct question on Teams. In part, it reads:

“Hey all, a while ago I was asking about how to name video elements and we discussed that using ARIA had limited support. I’ve retested this and couldn’t replicate that. All tests announced both aria-label and aria-labelledby names…”

Then he provides some details about the browser and screen reader combinations he got these results with, and adds a note about support in the ARIA in HTML spec.

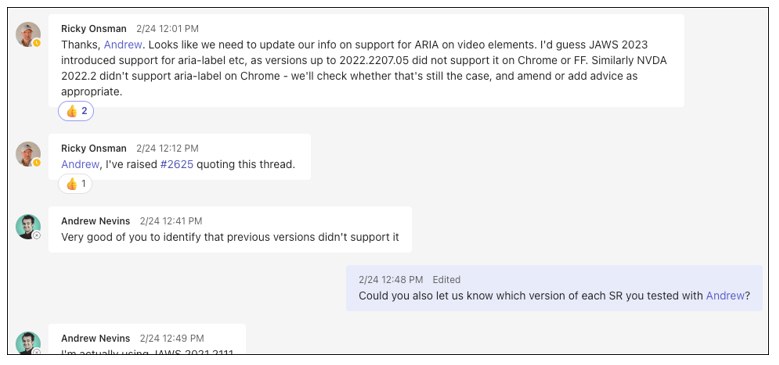

Ricky then looked into this, and responded:

“Thanks, Andrew. Looks like we need to update our info on support for ARIA on video elements. I’d guess JAWS 2023 introduced support for aria-label etc, as versions up to 2022 did not support it on Chrome or FF. Similarly NVDA 2022.2 didn’t support aria-label on Chrome – we’ll check whether that’s still the case, and amend or add advice as appropriate.”

Ricky lets Andrew know he has raised a GitHub issue, and Andrew is asked to provide some more detail about the screen reader versions he used.

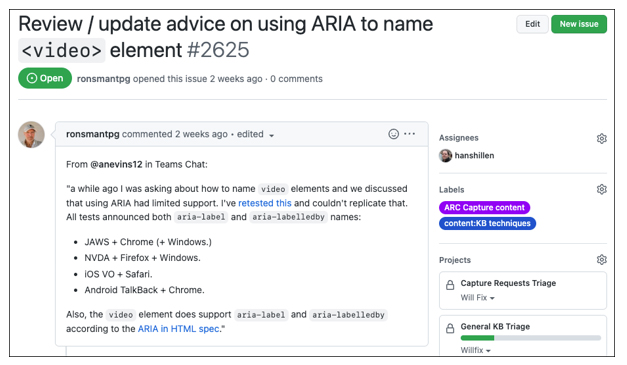

The GitHub issue Ricky logged can then be resolved by submitting a pull request to update the KnowledgeBase, which will be reviewed by other members of the team.

So, that’s an example of the Knowledge Center identifying a knowledge opportunity in the form of a direct request, and agreeing to undertake some research to resolve the request.

Capture request

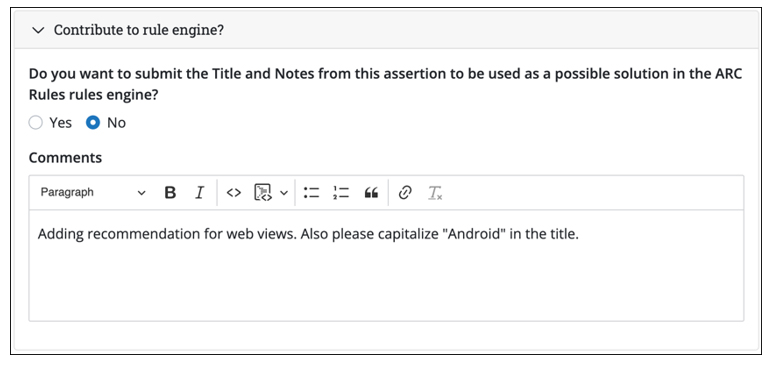

In this second example, a tester submitted a request while logging an issue during an audit. The screenshot shows a text field in the ARC Capture tool labeled ‘Contribute to rule engine?’

In this particular example, the request came from an engagement involving an Android app. The note provided for this request reads:

“Adding recommendation for web views. Also, please capitalize ‘Android’ in the title.”

In this note, the tester referred to their original write-up for the issue they were reporting on, and that write-up is included in the submitted request to the Knowledge Center. This way the tester can communicate to us what exactly they added, removed, or changed, and how they would like to see these changes turned into usable content.

All of this is done directly from the reporting tool, requiring as little diversion from the tester’s work as possible.

So far, we’ve mostly talked about how we approach the knowledge cycle by reacting to knowledge opportunities. We can also approach the knowledge cycle in a more proactive way.

Keeping the teams aware

You’ve read how the Knowledge Center reactively evolves our KnowledgeBase in response to TPGi team members, but there is another aspect to the Knowledge Life Cycle and that is proactively determining when we need to make changes.

We have to monitor developments in the digital accessibility industry and make sure the information and advice we provide is updated to reflect those developments.

We’ve already touched on how standards like WCAG continue to evolve and adapt to the modern world. It’s up to us to be aware of those developments and reflect them in our tools.

Changes to core markup, styling and scripting don’t come along very often but when they do, such as when HTML5 and CSS3 came out, we have to take them into account.

Browsers also constantly evolve, usually incrementally, but when a new version of a user agent starts implementing a previously unsupported ARIA attribute or property, we have to incorporate that change into our KnowledgeBase.

What makes that complicated is that browsers rarely synchronize what they support and what they don’t. Nor do they always announce changes when they make them.

The same goes for screen readers and other AT software: we have to check what’s been implemented in new versions of JAWS, NVDA, VoiceOver and TalkBack, as well as ZoomText, Dragon and more.

Even more complicating is that changes in these four groups (standards, code, browsers and AT) can, and usually do, affect each other. And not in a synchronized, organized way.

How do we convey that NVDA on Firefox – and only NVDA on Firefox – now supports an ARIA property that enables text resizing in a different way?

This also leads to the question of how much something has to change before we amend our KnowledgeBase. Tricky.

How do we monitor changes?

TPGi encourages team members to actively participate in W3C Working Groups relevant to their work and / or of interest to them.

At last count, we had 20 TPGi people participating in 26 different Working Groups.

This gives us a collective insight into the work of the major agency developing and revising standards relevant to digital accessibility.

We share what we find out in whatever way works best for each of us: messages and links in Teams channels and social media are popular because they’re fast and easy.

In the Knowledge Center, part of our job is to review a dozen or more email newsletters that summarize and link to key industry news that might influence our work.

We also have a set of people we follow, whether by email newsletter, RSS or other means, who write about topics relevant to our work. Again, we’re fortunate that our Chief Accessibility Officer is one of these experts who blogs on relevant topics.

It’s important to note that we regard these as knowledge leads, no matter how solidly they’re researched and presented. The bottom line for us is our own testing across devices, platforms, operating systems and user agents before we discuss and come to a consensus view on whether something should be changed, added to or deleted from the KnowledgeBase.

Those changes aren’t made lightly, as they can affect the accuracy of previously conducted audits and advice provided.

We schedule regular reviews for KnowledgeBase items. The frequency depends to some extent on how much time and effort we can give to them – currently our aim is to review every item at least once every eight months.
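As a rough illustration of that review schedule, here is a minimal sketch that flags which items are due, assuming each KnowledgeBase item records the date it was last reviewed. The function names, the item names, and the dictionary format are all hypothetical; this is not how our tooling actually stores review dates.

```python
import calendar
from datetime import date

# The eight-month target comes from the text; everything else is illustrative.
REVIEW_INTERVAL_MONTHS = 8


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def items_due(last_reviewed: dict[str, date], today: date) -> list[str]:
    """Return the names of items whose next review date has arrived or passed."""
    return sorted(
        name
        for name, reviewed in last_reviewed.items()
        if add_months(reviewed, REVIEW_INTERVAL_MONTHS) <= today
    )


if __name__ == "__main__":
    # Hypothetical item names and dates.
    reviewed = {
        "naming-video-elements": date(2023, 1, 15),
        "android-web-views": date(2023, 9, 1),
    }
    print(items_due(reviewed, today=date(2023, 10, 1)))
```

Counting in calendar months rather than a fixed number of days keeps the due date predictable for whoever is planning the review backlog.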

In amongst all of this, we also try to identify topics that should be added to our training services, including the Tutor self-paced training modules that form part of the ARC platform for clients, and the standalone training TPGi offers.

How do we implement changes?

You’ve seen the process the Knowledge Center follows when our team members raise issues or queries during the course of their work, and how we translate those into possible changes to our KnowledgeBase. That’s a critical part of our approach to updating and refining our knowledge in a reactive way.

Implementing improvements to the KnowledgeBase in a proactive way follows a similar process. Knowledge Center team members use GitHub to raise issues and make pull requests to update the KnowledgeBase. These require approvals from at least two other team members, a failsafe mechanism to catch any missteps before they become enshrined in the KnowledgeBase.
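The approval rule itself is simple enough to express in a few lines. The sketch below is purely illustrative: it does not call GitHub, and `can_merge` and the example names are hypothetical. It only encodes the rule as stated, that a change needs approval from at least two team members other than its author.

```python
# Threshold taken from the text: at least two *other* team members must approve.
REQUIRED_APPROVALS = 2


def can_merge(author: str, approvers: list[str]) -> bool:
    """Return True when enough distinct people other than the author have approved."""
    distinct_others = {name for name in approvers if name != author}
    return len(distinct_others) >= REQUIRED_APPROVALS


if __name__ == "__main__":
    # Hypothetical team members.
    print(can_merge("alex", ["blake", "casey"]))  # two other approvers
    print(can_merge("alex", ["blake", "alex"]))   # the author's own approval is ignored
```

Using a set means a repeated approval from the same person is counted once, which matches the intent of having two separate pairs of eyes on every change.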

This process might also prompt us to write new KnowledgeBase articles, rules, solutions, and design patterns, or to update existing ones.

It’s a constant, iterative process of refinement and improvement that puts the emphasis on consensus among the Knowledge Center team members and risk management for our auditors and our clients.

How do we notify users of changes?

The obvious next question is how we notify our users of changes to the KnowledgeBase.

This is where we have to be proactive in announcing our work. We can (and do) write blog posts that draw attention to an issue and how it should be addressed but then we still have to let people know those blog posts have been published, and why they’re relevant.

We make announcements in appropriate Teams channels, but we still need to make sure all the right people see them.

Some people will see what we’re doing in GitHub – and some won’t.

What we’ve come up with is an email newsletter that goes to all TPGi team members, which summarizes what we’ve done in the previous month. This brings topical issues to the attention of our colleagues and serves the twin – and equally important – function of keeping the Knowledge Center itself front of mind.

The reality is we have to remind everyone we exist and what we’re there for. The newsletter helps us do that.

It also means we have to actually get the work done – sending around an email with not much in it is not going to get us the credibility we need. Fortunately, that hasn’t been an issue for us, so far – if there’s one thing we can rely on, it’s that there’s always plenty of work to be done.

Wrap-up

Don’t let perfect be the enemy of good. This means that when we’ve settled on an approach that works, that solves a problem, that is usable – use it. Don’t hold something back because it isn’t perfect, go with it because it’s good. Don’t get bogged down on something because it could be better, it’s important to keep moving.

Know your limitations and accept them, including time, budget, staffing and resources. Set goals and roadmaps that are achievable, plan how to achieve them, and work with what you have. Then understand that you may not achieve these goals according to these roadmaps, and that there will be a backlog.

Accept that consensus is not always possible. It’s good to involve as many people as possible when decisions are made, but at some point that decision will have to be made. We’ve tried to set up discussions involving the entire team to get everybody’s two cents, but often you still end up with a lot of different opinions.

WCAG isn’t everything. Yes, Guideline conformance is accepted as the baseline for calling digital content accessible but don’t be afraid to suggest, or even demand, more. Like most people and organizations in this business, we codify certain strategies as Best Practice, meaning it’s not covered by WCAG but should be implemented.

That doesn’t mean it’s arguable or optional. Not adopting Best Practice is a failure, it’s just not a WCAG failure.

Our work takes place in a global context. As it happens, the four members of the Knowledge Center team are located in the UK, New Zealand and Australia, which means the people we work with, and for, are in time zones across the US, Europe and Asia that can be up to 21 hours apart. That’s not always a disadvantage, we just have to take it into account.

Other Considerations

Our approach has to be collaborative, mutually supportive and aimed at arriving at a consensus position. We all approach accessibility with our own experiences, understandings, biases and preferences. Rather than try to eliminate those, we can draw on them to reach a mutually agreed way to address an issue.

We draw on and respect our individual and collective expertise, as well as the smarts of others. It’s up to us to constantly hone that expertise to remain authentic and credible. And we always respect lived experience, above all.

We have to be responsive and prompt in how we deal with queries put to us. This is hard. We have to deliver the most accurate advice in the shortest possible time. Triaging and prioritization are critical. If a response to a specific enquiry is going to take time for research and testing, we have to let the enquirer know.

Next Steps

Increase awareness. We still have a lot of work to do to ensure that everyone at TPGi knows what we do and how they can best make use of our work. Even for people who do know us, we’re not always the first port of call. It’s up to us to change that by promoting our work better, offering credible solutions to issues and being available and willing to engage with our users.

Expand the team. We’d ideally like to have more people on the team, whether from outside or some of the highly skilled and experienced people working in other teams at TPGi. The reality is that we work for a commercial organization and we have to demonstrate the existing team gives value for money, let alone hiring more people.

Develop Tutor. We are currently in the process of transitioning our Tutor course modules to the Adapt Learning Framework, which will add a lot of richness and interactivity to them.

The job is never finished. Everyone who works in digital accessibility knows that the ultimate aim, the only true measure of total success, is to make themselves redundant. Only when we achieve universal digital accessibility will our job be finished.

In the meantime, we’ll keep building the Knowledge Center and refining the KnowledgeBase to best meet the needs of our team members, our clients and all people with disabilities.